DRL | Deep Reinforcement Learning

Developing a Deep Reinforcement Learning Model to Simulate Pipe Networks

Deep reinforcement learning (DRL) is a major enabler of advanced robotic systems, especially when robots must operate autonomously in complex, uncertain environments. DRL combines Reinforcement Learning with Deep Learning.

Reinforcement Learning

In Reinforcement Learning, an agent learns to maximize its cumulative reward by interacting with its environment.
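
As a minimal illustration of what "cumulative reward" can mean, the sketch below sums a list of per-step rewards with a discount factor. The function name `discounted_return` and the parameter `gamma` are assumptions made for this example; the text above does not say whether or how our model discounts rewards.

```python
def discounted_return(rewards, gamma=0.99):
    """Sum rewards, weighting the reward at step k by gamma**k."""
    total = 0.0
    # Walk backwards so each step needs only one multiply and one add.
    for reward in reversed(rewards):
        total = reward + gamma * total
    return total
```

With `gamma=1.0` this reduces to a plain sum of the rewards; smaller values make the agent favor rewards that arrive sooner.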

Deep Learning

Deep Learning uses neural networks such as MLPs or CNNs to analyze complex data and identify actionable patterns.

Single Track Pipe System

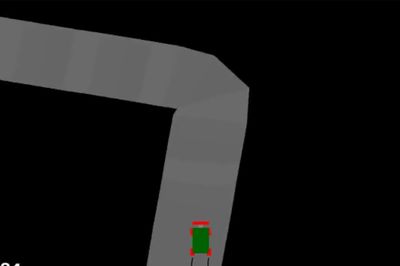

We have developed a Deep Reinforcement Learning model to simulate underground water pipe networks. An agent, modeled after our Mouse-Inspired Crawler, was trained to learn efficient navigation strategies through trial and error rather than by following hard-coded, predefined rules, which often fail to capture the complexity of real-world pipe networks.

Our DRL environment was built on the CarRacing-v3 environment from Gymnasium (the maintained successor to OpenAI Gym), with additional features and modifications to simulate a pipeline environment more realistically. For example, the track turn rate was increased from π/4 radians (45 degrees) to π/2 radians (90 degrees) to create sharper turns that more accurately represent the bends of a real pipe system.
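
One way to picture the turn-rate change is as a cap on how far the track heading may change from one track step to the next. The sketch below takes that reading; the function `next_heading` and its parameters are hypothetical and are not taken from the CarRacing source or from our environment code.

```python
import math

def next_heading(heading, desired_heading, max_turn):
    """Turn toward desired_heading, changing heading by at most max_turn radians."""
    # Wrap the difference into [-pi, pi) so the track turns the short way round.
    delta = (desired_heading - heading + math.pi) % (2 * math.pi) - math.pi
    # Clamp the change to the allowed turn rate.
    delta = max(-max_turn, min(max_turn, delta))
    return heading + delta

# Ask for a 90-degree bend under each limit.
gentle = next_heading(0.0, math.pi / 2, max_turn=math.pi / 4)  # about pi/4
sharp = next_heading(0.0, math.pi / 2, max_turn=math.pi / 2)   # about pi/2
```

Under this reading, the lower limit spreads a 90-degree bend over at least two track steps, while the higher limit lets it happen in one, which is closer to a pipe elbow.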

The agent was redesigned to more accurately model our physical robot. A reverse function was added so the agent can move both forward and backward, enabling it to recover when it becomes stuck inside the pipe. In addition, a braking function was implemented to allow the agent to stop completely. Together, these modifications significantly enhance the realism of the DRL simulation.
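
The reverse and braking behavior described above can be sketched as a one-dimensional velocity update. This is only an illustration: the function `apply_controls`, the signed `throttle` convention, and the values of `dt`, `max_accel`, and `max_speed` are all assumptions, not the simulation's actual physics.

```python
def apply_controls(velocity, throttle, brake, dt=0.02, max_accel=5.0, max_speed=2.0):
    """Advance a 1-D velocity by one time step.

    throttle is in [-1, 1]; negative values drive the agent in reverse.
    brake, when True, brings the agent to a complete stop.
    """
    if brake:
        return 0.0
    # Keep the command inside the allowed range before applying it.
    throttle = max(-1.0, min(1.0, throttle))
    velocity += throttle * max_accel * dt
    # Limit speed in both the forward and reverse directions.
    return max(-max_speed, min(max_speed, velocity))
```

A stuck agent could, for example, call this with a negative `throttle` for a few steps to back away from an obstruction before trying again.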

Multi Track Pipe System

To better reflect real-world pipe networks, we also implemented multiple tracks in the custom environment, allowing track segments to branch, merge, or run in parallel. We also implemented joint-detection and connection logic: the system generates “start” and “end” tiles at track boundaries and uses a closest-point matching algorithm to connect discontinuous segments.
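
A minimal sketch of closest-point matching is shown below, assuming each tile is an `(x, y)` tuple and that every "end" tile should be joined to its nearest unused "start" tile. The function `connect_segments` and this greedy pairing strategy are assumptions for illustration; the matching used in our environment may differ.

```python
import math

def connect_segments(end_tiles, start_tiles):
    """Greedily pair each "end" tile with its nearest unused "start" tile.

    Tiles are (x, y) tuples. Returns a list of (end_index, start_index) pairs.
    """
    unused = list(range(len(start_tiles)))
    pairs = []
    for i, end in enumerate(end_tiles):
        if not unused:
            break  # More ends than starts: the remaining ends stay unconnected.
        # Pick the closest start tile by Euclidean distance.
        j = min(unused, key=lambda k: math.dist(end, start_tiles[k]))
        unused.remove(j)
        pairs.append((i, j))
    return pairs
```

Greedy pairing is simple but depends on the order in which the end tiles are processed; a globally optimal assignment would need a different algorithm.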

The wall fixtures were reconfigured to allow the agent to merge onto other tracks. At merge points, walls are placed only on the left and right sides of the track, while standard four-wall fixtures are maintained elsewhere.

This work establishes the foundation for transferring DRL-trained policies into physical robots and ultimately deploying them in real-world pipe systems.

MICkey Innovations

301 North Harrison Street, Princeton, New Jersey 08540, USA
